Stories

Explore how we’re putting our ambitions into action every day.

Sustainability has been a core value for Google since our founding, and our environmental reporting and thinking have evolved over the years. Please refer to our latest Environmental Report for our most current methodology and approach.

-

February 2024

Making proactive safer chemistry a standard operating procedure

-

January 2024

Tackling Single-Use Plastics: How 5 innovative companies rise to the challenge

-

January 2024

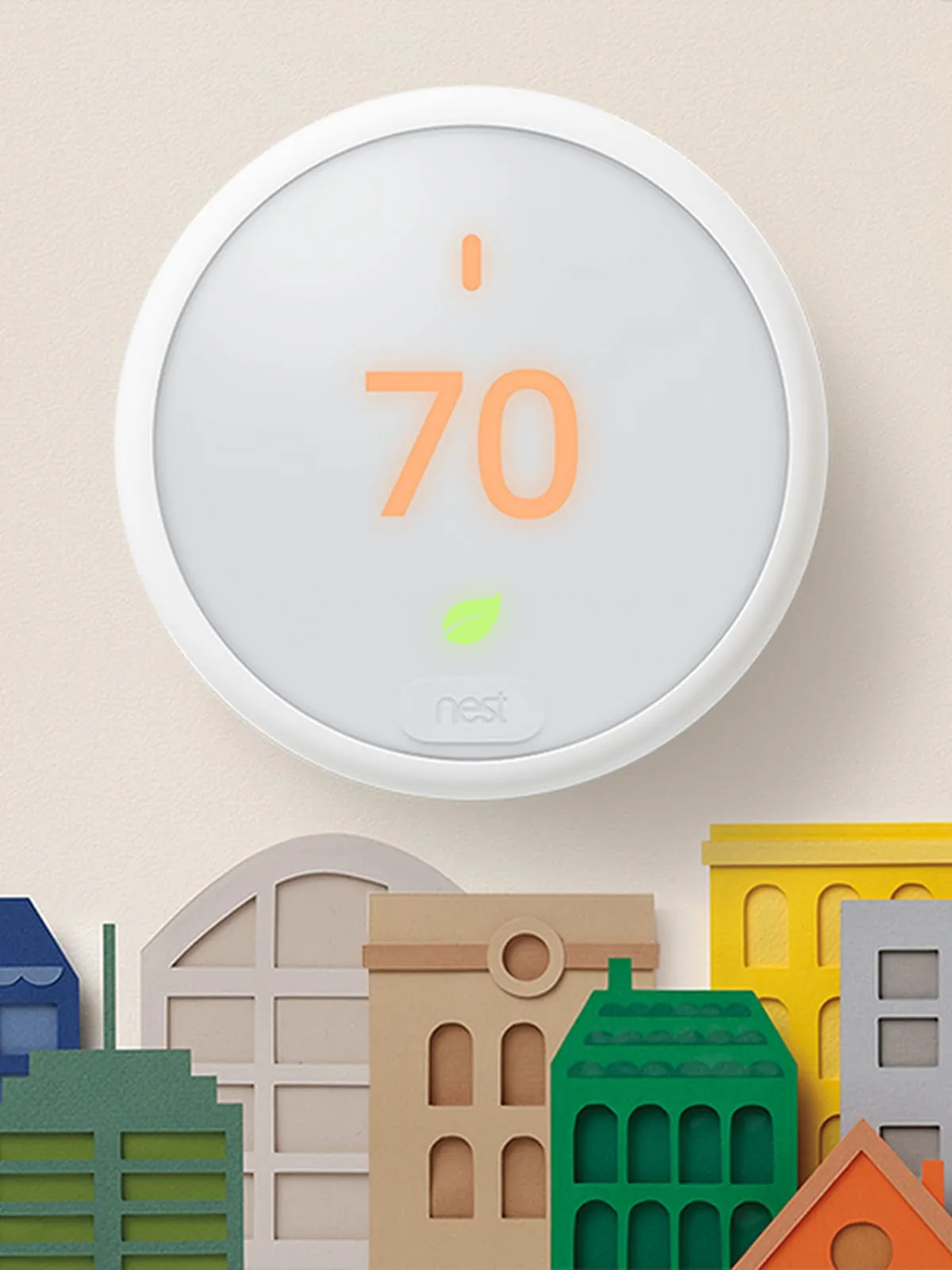

One click away: Your home’s thermostat can save energy and the grid

-

September 2023

CircularNet: How Recykal built Asia’s largest circular economy marketplace using Google AI

-

September 2023

Reducing Waste and Empowering Students: How an Oklahoma school is breathing new life into Chromebooks

-

September 2023

A proactive approach to safer product materials

-

August 2022

Empowering opportunity: Supporting peace and preservation in Garamba

-

August 2022

Helping to protect our people—and planet—through safer chemistry and responsible management

-

August 2022

Rethinking supply chain packaging to reduce upstream waste

-

August 2022

Lowering emotional barriers to e‑waste recycling for supply chain sustainability

-

November 2021

Using blockchain to advance minerals traceability with LuNa Smelter

-

March 2021

Wildlife Insights helps capture the beauty of biodiversity, as well as its fragility

-

December 2019

Interactive tool shows how small changes can make a big impact

-

January 2021

The alchemy of aluminum: Pioneering a new recycled alloy for our products

-

January 2021

Rise to power: Congolese artists speak their truth in Ukweli documentary

-

November 2020

Journey through our supply chain

-

December 2016

100% renewable is just the beginning

-

February 2020

All for one, one for Earth: Using geospatial tools to create positive change in India

-

October 2019

Building an energy-efficient, low-carbon supply chain

-

February 2018

Capturing value from waste in upstate New York

-

December 2016

Earth Engine creates a living map of forest loss

-

September 2018

Ecologically focused landscapes are coming to life on Google campuses

-

October 2019

From pilot to power: Gathering clean energy momentum in the Congo

-

December 2016

Greening the grid: how Google buys renewable energy

-

August 2019

How to build an event booth out of old barns and bicycle tires

-

October 2018

Learning by listening: Hearing from workers influences our strategy

-

October 2018

Made by me

-

October 2017

Mapping the invisible: Street View cars add air pollution sensors

-

October 2018

More than mining: Supporting economic opportunity in the Congo with clean energy

-

December 2018

Nest uses energy-saving technology to relieve the low-income energy burden

-

October 2017

Northern exposure: How our Nordic renewable deals are reaping rewards

-

September 2018

Oceans of data: tracking illegal fishing over 140 million square miles

-

March 2018

Once is never enough

-

October 2019

Partnering with suppliers to create better recycled plastic

-

September 2018

Positive energy: Belgian site becomes first Google data center to add on-site solar

-

December 2016

Reaching our solar potential, one rooftop at a time

-

December 2016

Recipe for sustainability: Why Google cafes love ugly produce

-

October 2018

Revealing the realities of mining with VR

-

October 2018

Safer chemistry for healthy manufacturing

-

October 2019

Supply chain meets blockchain for end-to-end mineral tracking

-

September 2019

The Internet is 24x7—carbon-free energy should be too

-

September 2019

The journey toward healthier materials

-

September 2018

The more you know: Turning environmental insights into action

-

June 2018

Transparency unleashed: How Global Fishing Watch is transforming fishery management

-

September 2018

Unlocking access to corporate renewable energy purchasing in Taiwan

![[stories_overview] Recykal_Google Sustainability Image_900x506 pix_02.png](https://www.gstatic.com/marketing-cms/assets/images/83/64/734bb09149d0bb5b1ca133e23c77/recykal-google-sustainability-image-900x506-pix-02.png)